Software Deduplication: Quick comparison of savings rates

Posted 2015-07-31 17:59 by Traesk. Edited 2016-03-30 04:03 by Traesk.

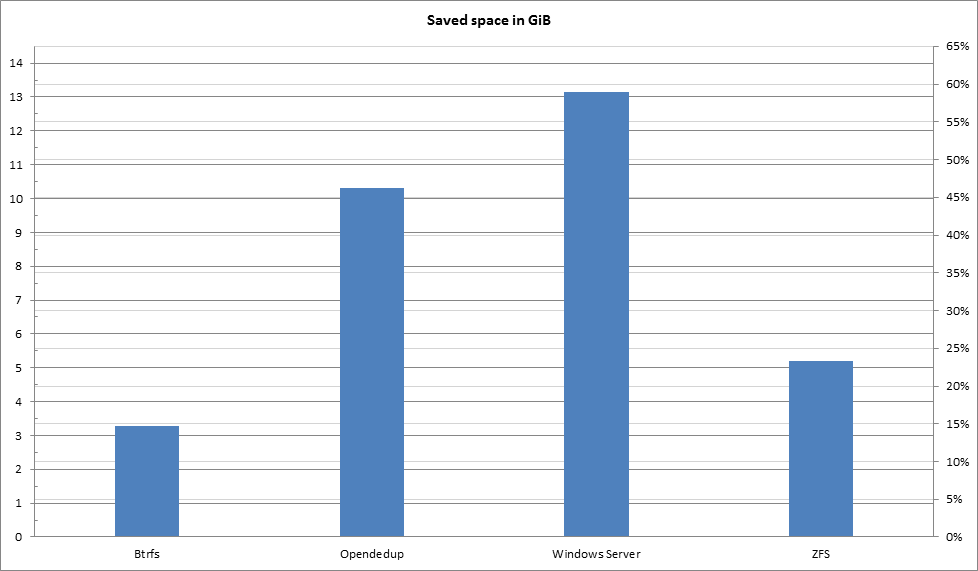

Purpose: To see whether any of the alternatives is significantly better or worse, and ultimately to decide which OS/filesystem I might use on a file server.

Scope/limitations: This test does not consider factors other than the actual savings rates, such as performance, security, and cost. The method is very limited and might not reflect real-world results. There might be some minor differences in the results due to rounding and possibly inconsistent variables between tests. This is the first time I have worked with most of these systems, so the results are probably not optimized. The results were pretty clear, though, so a few percentage points either way shouldn't matter.

Method: I used 22.3 GiB of Windows XP installation ISOs, 52 ISOs in total. No two files were identical, but some contained a lot of duplicate data, like the Swedish XP Home Edition vs. the Swedish N version of XP Home Edition. I deduplicated these files and noted how much space I saved compared to the 22.3 GiB.
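Every savings figure in this post uses the same formula: space saved relative to the original 22.3 GiB. Because df was run with --block-size=M on Btrfs, the baseline there is expressed in MiB (22.3 × 1024 = 22835.2). A minimal Python sketch of that arithmetic; the names are illustrative only, and the example values are the Btrfs df readings reported further down:

```python
# savings = (original size - space used after deduplication) / original size
ORIGINAL_GIB = 22.3                 # the 52 Windows XP ISOs
ORIGINAL_MIB = ORIGINAL_GIB * 1024  # 22835.2 MiB, comparable with df --block-size=M


def savings_rate(original: float, used: float) -> float:
    """Fraction of the original data that no longer takes up space."""
    return (original - used) / original


# Btrfs + duperemove, used space in MiB as reported by df --block-size=M
for block_size, used_mib in (("128K", 19611), ("64K", 19468)):
    print(f"{block_size}: {savings_rate(ORIGINAL_MIB, used_mib):.1%}")  # 14.1%, then 14.7%
```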

Please see the updated results!

Introduction

Deduplication is the method of removing duplicate data in files. That way, if you have two similar files, theoretically only the "new" or "different" data in the second file takes up space. There are two ways to do deduplication: "post-process" and "in-line" (sometimes called by other names). Post-process scans the files after they are copied and then removes duplicate data. In-line compares the data before it is written, so duplicate data is never written at all. In-line needs a lot of RAM to keep all the necessary information available, but should copy identical files much faster, since the duplicate data of the second file doesn't have to be written to disk.
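To make the in-line idea concrete, here is a toy, in-memory model of block-level in-line deduplication. It is not how any of the tested systems work internally: the class and method names are hypothetical, and the 4 KiB fixed block size and SHA-256 hashing are assumptions chosen for illustration.

```python
import hashlib


class InlineDedupStore:
    """Toy in-line, block-level deduplicating store (hypothetical, illustration only).

    Every block is hashed *before* it is written. A block whose hash is already
    in the index is recorded as a reference instead of being written again.
    Keeping this index available is what makes in-line deduplication RAM-hungry.
    """

    def __init__(self, block_size: int = 4096) -> None:
        self.block_size = block_size
        self.blocks: dict[bytes, bytes] = {}     # hash -> block data ("the disk")
        self.files: dict[str, list[bytes]] = {}  # file name -> ordered block hashes

    def write(self, name: str, data: bytes) -> None:
        refs = []
        for off in range(0, len(data), self.block_size):
            block = data[off:off + self.block_size]
            digest = hashlib.sha256(block).digest()
            if digest not in self.blocks:  # only previously unseen data is written
                self.blocks[digest] = block
            refs.append(digest)
        self.files[name] = refs

    def read(self, name: str) -> bytes:
        return b"".join(self.blocks[h] for h in self.files[name])

    def physical_bytes(self) -> int:
        return sum(len(b) for b in self.blocks.values())


# Two "ISOs" of four distinct 4 KiB blocks each; only the last block differs
home = b"".join(bytes([i]) * 4096 for i in range(4))
home_n = home[:3 * 4096] + b"\xff" * 4096

store = InlineDedupStore()
store.write("home.iso", home)
store.write("home_n.iso", home_n)
print(store.physical_bytes())  # 20480 bytes stored for 32768 bytes written
```

Writing the second file only adds one new 4 KiB block. A post-process system would instead write both files in full and run the same hash matching afterwards, and a whole-file approach would find nothing to share here, since the two files are not identical.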

Btrfs

The Btrfs filesystem supports post-process deduplication, but you need a tool that tells it what to dedupe. There seem to be two alternatives: bedup and duperemove. Bedup only does whole-file deduplication rather than block-level deduplication (I also verified this by running the tool, which resulted in no space savings), so it's not really what we are looking for.

General info

Duperemove v0.09 was used in this test.

Ubuntu 14.10

uname -a

Linux hostname 3.16.0-30-generic #40-Ubuntu SMP Mon Jan 12 22:06:37 UTC 2015 x86_64 x86_64 x86_64 GNU/Linux

btrfs --version

Btrfs v3.14.1

blockdev --getbsz /dev/sda3

4096

Testing

Used space before running duperemove:

df /dev/sda3 --block-size=M

Filesystem 1M-blocks Used Available Use% Mounted on

/dev/sda3 82969M 22935M 58008M 29% /media/traesk/020d9e3e-0a61-4d06-8b78-c5b4dd975bd9

duperemove -rdh /media/traesk/020d9e3e-0a61-4d06-8b78-c5b4dd975bd9/

df /dev/sda3 --block-size=M

Filesystem 1M-blocks Used Available Use% Mounted on

/dev/sda3 82969M 19611M 61325M 25% /media/traesk/020d9e3e-0a61-4d06-8b78-c5b4dd975bd9

duperemove -rdh -b 64k /media/traesk/020d9e3e-0a61-4d06-8b78-c5b4dd975bd9/

df /dev/sda3 --block-size=M

Filesystem 1M-blocks Used Available Use% Mounted on

/dev/sda3 82969M 19468M 61469M 25% /media/traesk/020d9e3e-0a61-4d06-8b78-c5b4dd975bd9

duperemove -rdh -b 4k /media/traesk/020d9e3e-0a61-4d06-8b78-c5b4dd975bd9/

Saved space with 128K block size: (22835.2-19611)/22835.2= 14.1%

Saved space with 64K block size: (22835.2-19468)/22835.2= 14.7%
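The 64K run found slightly more duplicate data than the default 128K blocks. One plausible reason: a single changed byte spoils the whole block it sits in, so smaller blocks waste less. The sketch below shows this on synthetic data only (random bytes, not the actual ISOs); the 4 MiB size and the "one changed byte every 300,000 bytes, no insertions" pattern are assumptions chosen to make the effect visible, and the real gain here was much smaller (14.1% to 14.7%). Requires Python 3.9+ for `randbytes`.

```python
import hashlib
import random


def dedup_savings(files: list[bytes], block_size: int) -> float:
    """Fraction of all bytes that fixed-size block deduplication avoids storing."""
    seen: set[bytes] = set()
    total = stored = 0
    for data in files:
        for off in range(0, len(data), block_size):
            block = data[off:off + block_size]
            total += len(block)
            digest = hashlib.sha256(block).digest()
            if digest not in seen:
                seen.add(digest)
                stored += len(block)
    return 1 - stored / total


rng = random.Random(42)
base = rng.randbytes(4 * 1024 * 1024)  # 4 MiB of incompressible stand-in data

# Variant of the same size: one byte flipped every 300,000 bytes (14 changes)
variant = bytearray(base)
for pos in range(150_000, len(variant), 300_000):
    variant[pos] ^= 0xFF
variant = bytes(variant)

for kib in (128, 64, 4):
    print(f"{kib:>3}K blocks: {dedup_savings([base, variant], kib * 1024):.1%}")
# 128K: 28.1%, 64K: 39.1%, 4K: 49.3% (each change costs one whole block)
```

The trade-off is that smaller blocks mean more hashes to compute and more metadata to keep track of.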

I find it interesting that the log says:

Using 128K blocks

Using hash: SHA256

Using 2 threads for file hashing phase

[…]

Kernel processed data (excludes target files): 4.0G

Comparison of extent info shows a net change in shared extents of: 7.4G

Opendedup

Opendedup is a Java-based file system that runs on top of your regular file system and does in-line deduplication.

General info

sdfs-2.0.10_amd64.deb was used in this test. Underlying filesystem was Btrfs.

Testing

Default settings

mkfs.sdfs --volume-name=tank --volume-capacity=100gb --base-path=/media/traesk/ee291934-34db-448c-98cb-c96447c29259/

mount.sdfs tank /media/tank

sdfscli --volume-info

Volume Capacity : 100 GB

Volume Current Logical Size : 22 GB

Volume Max Percentage Full : 95.0%

Volume Duplicate Data Written : 11 GB

Unique Blocks Stored: 10 GB

Unique Blocks Stored after Compression : 10 GB

Cluster Block Copies : 2

Volume Virtual Dedup Rate (Unique Blocks Stored/Current Size) : -9.96%

Volume Actual Storage Savings (Compressed Unique Blocks Stored/Current Size) : 50.92%

Compression Rate: 0.0%

df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda3 75G 12G 59G 17% /media/traesk/ee291934-34db-448c-98cb-c96447c29259

sdfs:/etc/sdfs/tank-volume-cfg.xml:6442 101G 11G 90G 11% /media/tank

Variable block deduplication

mkfs.sdfs --volume-name=tank --volume-capacity=100gb --hash-type=VARIABLE_MURMUR3 --base-path=/media/traesk/ee291934-34db-448c-98cb-c96447c29259/

mount.sdfs tank /media/tank

sdfscli --volume-info

Volume Capacity : 100 GB

Volume Current Logical Size : 22 GB

Volume Max Percentage Full : 95.0%

Volume Duplicate Data Written : 12 GB

Unique Blocks Stored: 9 GB

Unique Blocks Stored after Compression : 9 GB

Cluster Block Copies : 2

Volume Virtual Dedup Rate (Unique Blocks Stored/Current Size) : -8.94%

Volume Actual Storage Savings (Compressed Unique Blocks Stored/Current Size) : 56.97%

Compression Rate: 3.31%

df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda3 75G 12G 59G 17% /media/traesk/28b8182f-185b-41b7-a117-b667e6d78f09

sdfs:/etc/sdfs/tank-volume-cfg.xml:6442 101G 9,7G 91G 10% /media/tank

Savings with Variable block deduplication: (22.3-12)/22.3 = 46.2%

I should have had df report megabytes (as I did for Btrfs) for this one. Also, Opendedup seems to use some storage on the underlying filesystem as well; that's probably why my calculation shows a lower savings rate than sdfscli does.
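The variable-block run (--hash-type=VARIABLE_MURMUR3) found more duplicate data than the default settings. I don't know Opendedup's exact chunking algorithm, so the following is only a generic sketch of content-defined chunking with a "gear" rolling hash, one common way to get variable-size blocks. All names and tuning values (MIN_CHUNK, MAX_CHUNK, CUT_BITS, the GEAR table) are hypothetical. It shows why cutting at content-defined positions survives data that has shifted, where fixed-size blocks stop lining up.

```python
import hashlib
import random

# Hypothetical tuning values for this sketch (not Opendedup's settings)
MIN_CHUNK = 2 * 1024   # never cut before 2 KiB
MAX_CHUNK = 64 * 1024  # always cut at 64 KiB
CUT_BITS = 13          # cut when the top 13 hash bits are zero (~1 in 8192 positions)
MASK64 = (1 << 64) - 1

_gear_rng = random.Random(0)
GEAR = [_gear_rng.getrandbits(64) for _ in range(256)]  # fixed random value per byte value


def cdc_chunks(data: bytes) -> list[bytes]:
    """Content-defined chunking with a gear rolling hash.

    Each byte shifts the 64-bit hash left by one, so once 64 bytes have been
    processed the hash depends only on the most recent 64 bytes of content,
    not on their absolute offset in the file.
    """
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        h = ((h << 1) + GEAR[byte]) & MASK64
        size = i - start + 1
        if (size >= MIN_CHUNK and h >> (64 - CUT_BITS) == 0) or size >= MAX_CHUNK:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])
    return chunks


def fixed_chunks(data: bytes, size: int = 8 * 1024) -> list[bytes]:
    return [data[i:i + size] for i in range(0, len(data), size)]


def shared_fraction(old: list[bytes], new: list[bytes]) -> float:
    """Share of the new file's bytes found in chunks already stored for the old file."""
    known = {hashlib.sha256(c).digest() for c in old}
    shared = sum(len(c) for c in new if hashlib.sha256(c).digest() in known)
    return shared / sum(len(c) for c in new)


rng = random.Random(1)
base = rng.randbytes(256 * 1024)                         # stand-in data (Python 3.9+)
variant = base[:1000] + b"inserted" * 12 + base[1000:]  # 96 bytes inserted near the start

print(f"fixed 8K blocks: {shared_fraction(fixed_chunks(base), fixed_chunks(variant)):.1%}")
print(f"content-defined: {shared_fraction(cdc_chunks(base), cdc_chunks(variant)):.1%}")
```

With fixed-size blocks, every block after the insertion is offset by 96 bytes, so nothing matches; the content-defined chunker resynchronizes after the first chunk or two.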

Windows

With Windows Server 2012, Microsoft introduced post-process deduplication for NTFS. Note that the new ReFS filesystem is not supported.

General info

Windows Server 2012 R2 with Update 1, otherwise untouched.

Testing

Enable-DedupVolume e:

Set-DedupVolume e: -MinimumFileAgeDays 0

Start-DedupJob e: -Type Optimization

Start-DedupJob e: -Type GarbageCollection

Start-DedupJob e: -Type Scrubbing

Get-WmiObject win32_logicaldisk -filter "DeviceID='E:'" | ForEach-Object {($_.size - $_.freespace) / 1GB}

9,1517219543457

Get-DedupVolume | Format-List

Volume : E:

VolumeId : \\?\Volume{bb855b83-aeed-11e4-80b5-000c29fa07e3}\

Enabled : True

UsageType : Default

DataAccessEnabled : True

Capacity : 50.94 GB

FreeSpace : 41.78 GB

UsedSpace : 9.15 GB

UnoptimizedSize : 22.67 GB

SavedSpace : 13.52 GB

SavingsRate : 59 %

MinimumFileAgeDays : 0

MinimumFileSize : 32768

NoCompress : False

ExcludeFolder :

ExcludeFileType :

ExcludeFileTypeDefault : {edb, jrs}

NoCompressionFileType : {asf, mov, wma, wmv...}

ChunkRedundancyThreshold : 100

Verify : False

OptimizeInUseFiles : False

OptimizePartialFiles : False

ZFS

ZFS is another filesystem capable of deduplication, but this one does it in-line, and no additional software is required. Originally part of Sun's OpenSolaris, it was made closed source after Oracle took over. This led to the creation of OpenZFS, which is (obviously) developed independently of Oracle's version.

General info

Ubuntu 14.10

uname -a

Linux hostname 3.16.0-30-generic #40-Ubuntu SMP Mon Jan 12 22:06:37 UTC 2015 x86_64 x86_64 x86_64 GNU/Linux

zpool get version tank

NAME PROPERTY VALUE SOURCE

tank version - default

zfs get version tank

NAME PROPERTY VALUE SOURCE

tank version 5 -

dmesg | grep -E 'SPL:|ZFS'

[ 1383.325360] SPL: Loaded module v0.6.3-14~utopic

[ 1383.612907] ZFS: Loaded module v0.6.3-15~utopic, ZFS pool version 5000, ZFS filesystem version 5

[ 2530.532362] SPL: using hostid 0x007f0101

Solaris 11.2

uname -a

SunOS hostname 5.11 11.2 i86pc i386 i86pc

zpool get version tank

NAME PROPERTY VALUE SOURCE

tank version 35 default

zfs get version tank

NAME PROPERTY VALUE SOURCE

tank version 6 -

Testing

Ubuntu: Deduplication on, compression off

zpool create -O dedup=on tank /dev/sda3

zdb -DD tank

DDT-sha256-zap-duplicate: 31239 entries, size 274 on disk, 142 in core

DDT-sha256-zap-unique: 116596 entries, size 282 on disk, 152 in core

DDT histogram (aggregated over all DDTs):

bucket allocated referenced

______ ______________________________ ______________________________

refcnt blocks LSIZE PSIZE DSIZE blocks LSIZE PSIZE DSIZE

------ ------ ----- ----- ----- ------ ----- ----- -----

1 114K 14.2G 14.2G 14.2G 114K 14.2G 14.2G 14.2G

2 30.2K 3.78G 3.78G 3.78G 63.8K 7.97G 7.97G 7.97G

4 306 38.2M 38.2M 38.2M 1.20K 153M 153M 153M

8 1 128K 128K 128K 12 1.50M 1.50M 1.50M

16 2 256K 256K 256K 47 5.88M 5.88M 5.88M

Total 144K 18.0G 18.0G 18.0G 179K 22.3G 22.3G 22.3G

dedup = 1.24, compress = 1.00, copies = 1.00, dedup * compress / copies = 1.24

Ubuntu: Deduplication on, compression on

zpool create -O dedup=on -O compression=on tank /dev/sda3

zdb -DD tank

DDT-sha256-zap-duplicate: 31238 entries, size 279 on disk, 143 in core

DDT-sha256-zap-unique: 116596 entries, size 283 on disk, 151 in core

DDT histogram (aggregated over all DDTs):

bucket allocated referenced

______ ______________________________ ______________________________

refcnt blocks LSIZE PSIZE DSIZE blocks LSIZE PSIZE DSIZE

------ ------ ----- ----- ----- ------ ----- ----- -----

1 114K 14.2G 13.8G 13.8G 114K 14.2G 13.8G 13.8G

2 30.2K 3.78G 3.63G 3.63G 63.8K 7.97G 7.67G 7.67G

4 306 38.2M 36.3M 36.3M 1.20K 153M 145M 145M

8 1 128K 4.50K 4.50K 12 1.50M 54K 54K

16 1 128K 4.50K 4.50K 24 3M 108K 108K

Total 144K 18.0G 17.5G 17.5G 179K 22.3G 21.6G 21.6G

dedup = 1.24, compress = 1.03, copies = 1.00, dedup * compress / copies = 1.28

Solaris: Deduplication on, compression on

zpool create -O compression=on -O dedup=on tank c2t1d0

df -k tank

Filesystem 1024-blocks Used Available Capacity Mounted on

tank 30859235 22681047 8134226 74% /tank

zdb -DD tank

DDT-sha256-zap-duplicate: 31238 entries, size 278 on disk, 143 in core

DDT-sha256-zap-unique: 116596 entries, size 283 on disk, 148 in core

DDT histogram (aggregated over all DDTs):

bucket allocated referenced

______ ______________________________ ______________________________

refcnt blocks LSIZE PSIZE DSIZE blocks LSIZE PSIZE DSIZE

------ ------ ----- ----- ----- ------ ----- ----- -----

1 114K 14.2G 13.8G 13.8G 114K 14.2G 13.8G 13.8G

2 30.2K 3.78G 3.63G 3.63G 63.8K 7.97G 7.67G 7.67G

4 306 38.2M 36.3M 36.3M 1.20K 153M 145M 145M

8 1 128K 4.50K 4.50K 12 1.50M 54K 54K

16 1 128K 4.50K 4.50K 24 3M 108K 108K

Total 144K 18.0G 17.5G 17.5G 179K 22.3G 21.6G 21.6G

dedup = 1.24, compress = 1.03, copies = 1.00, dedup * compress / copies = 1.28

zpool list tank

NAME SIZE ALLOC FREE CAP DEDUP HEALTH ALTROOT

tank 25.8G 17.5G 8.22G 68% 1.23x ONLINE -

Ubuntu: Deduplication on, compression gzip-9

zpool create -O dedup=on -O compression=gzip-9 tank /dev/sda3

zdb -DD tank

DDT-sha256-zap-duplicate: 31238 entries, size 279 on disk, 142 in core

DDT-sha256-zap-unique: 116596 entries, size 285 on disk, 151 in core

DDT histogram (aggregated over all DDTs):

bucket allocated referenced

______ ______________________________ ______________________________

refcnt blocks LSIZE PSIZE DSIZE blocks LSIZE PSIZE DSIZE

------ ------ ----- ----- ----- ------ ----- ----- -----

1 114K 14.2G 13.5G 13.5G 114K 14.2G 13.5G 13.5G

2 30.2K 3.78G 3.55G 3.55G 63.8K 7.97G 7.51G 7.51G

4 306 38.2M 35.3M 35.3M 1.20K 153M 141M 141M

8 1 128K 512 512 12 1.50M 6K 6K

16 1 128K 512 512 24 3M 12K 12K

Total 144K 18.0G 17.1G 17.1G 179K 22.3G 21.2G 21.2G

dedup = 1.24, compress = 1.05, copies = 1.00, dedup * compress / copies = 1.30

Ubuntu: Savings with deduplication on, compression on: (22.3-17.5)/22.3 = 21.5%

Solaris: Savings with deduplication on, compression on: (22.3-17.5)/22.3 = 21.5%

Ubuntu: Savings with deduplication on, compression on with gzip-9: (22.3-17.1)/22.3 = 23.3%

OpenZFS on Ubuntu and Oracle ZFS on Solaris appear to give practically identical results.
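The dedup ratio zdb prints is referenced data divided by allocated data (22.3G / 18.0G ≈ 1.24 in the dedup-only run), so it converts to the savings percentage used in this post as 1 - 1/ratio. The same zdb output also gives a rough feel for the RAM cost of in-line deduplication: a common estimate of the dedup table's memory footprint is the number of DDT entries times the in-core bytes per entry that zdb reports. A small sketch using the figures from the Ubuntu dedup-only run; the function names are illustrative, and the memory figure is an estimate, not a measurement.

```python
# Figures copied from the zdb -DD output of the Ubuntu run (dedup on, compression off)
DDT_TABLES = {
    "DDT-sha256-zap-duplicate": (31239, 142),  # (entries, bytes per entry in core)
    "DDT-sha256-zap-unique": (116596, 152),
}


def savings_from_ratio(ratio: float) -> float:
    """ratio = referenced / allocated, so the saved fraction is 1 - 1/ratio."""
    return 1 - 1 / ratio


def ddt_core_bytes(tables: dict[str, tuple[int, int]]) -> int:
    """Rough DDT memory estimate: entries times in-core bytes per entry, summed."""
    return sum(entries * size for entries, size in tables.values())


print(f"dedup only (1.24x):          {savings_from_ratio(1.24):.1%}")  # 19.4%
print(f"dedup + compression (1.28x): {savings_from_ratio(1.28):.1%}")  # 21.9%
print(f"DDT in core: {ddt_core_bytes(DDT_TABLES) / 2**20:.1f} MiB")   # 21.1 MiB
```

The 21.9% from the 1.28x ratio is a little above the 21.5% computed from 17.5G and 22.3G, most likely because both sets of printed figures are rounded. About 21 MiB of dedup table for 22.3 GiB of data at ZFS's default 128K record size is modest, but it grows with the number of unique blocks.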

Summary and conclusion

Please see the updated results!

The results clearly show that, in terms of deduplication efficiency, I have no reason to leave my comfort zone and abandon Windows for Linux or UNIX.
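For a side-by-side view, the figures from each section can be ranked with the same savings formula. This is only a recap of numbers already reported above for this one ISO data set. The Windows section quotes only the tool's SavingsRate; applying the formula to its UsedSpace of 9.15 GB (PowerShell's 1GB is 2^30 bytes, so this is GiB) gives 59.0%, in line with that 59 %. The dictionary labels are my own shorthand.

```python
ORIGINAL_GIB = 22.3

# Space used after deduplication, in GiB, taken from the sections above
used_gib = {
    "Windows Server 2012 R2 (NTFS)": 9.15,            # Get-DedupVolume UsedSpace
    "Opendedup, variable blocks": 12.0,               # df -h on the underlying Btrfs
    "ZFS, dedup + gzip-9": 17.1,                      # zdb -DD allocated DSIZE
    "ZFS, dedup + compression": 17.5,                 # zdb -DD / zpool list ALLOC
    "Btrfs + duperemove, 64K blocks": 19468 / 1024,   # df --block-size=M
    "Btrfs + duperemove, 128K blocks": 19611 / 1024,  # df --block-size=M
}


def savings_rate(original: float, used: float) -> float:
    return (original - used) / original


ranking = sorted(
    ((name, savings_rate(ORIGINAL_GIB, used)) for name, used in used_gib.items()),
    key=lambda item: item[1],
    reverse=True,
)
for name, rate in ranking:
    print(f"{rate:6.1%}  {name}")
```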